The AI doctors that love having a long conversation

OK, before the whole medical fraternity starts jumping up and down, these AI chatbot examples are not fully fledged doctors. However, they certainly do provide a very strong toolkit for the large percentage of people who just need a little assistance or some initial triage for their symptoms.

This seems to be a recurring theme with the larger developments that have had some serious funding. In some cases the bots also serve as a simple assistant to a doctor, but how far that is actual rather than theoretical is difficult to work out from the information that is available to me.

I see these as two distinctly different types of bot: diagnosis of ailments for a patient, or assistance for a patient, such as drug reminders. They both have their place, but the investment and requirements for each are quite different.

As you will see, the investment figures for the diagnosis bots are significant, because the technology needed to ‘replace’ a medical professional has to be very capable. Most of these use AI and machine learning to power algorithms that can give the patient a sensible answer about their symptoms.

The challenges are across the board. The industry is very young, so there are simply not that many companies out there, and a large proportion are in the US, in a healthcare space that is dramatically different from the UK or other countries that have national health systems.

As I am sure you would expect, there are some significant investment figures flying about, and this will only increase as the business models improve and can demonstrate strong profit lines in a technology space that is difficult to master.

There is also the matter of interoperability with existing healthcare systems, which is the largest challenge in healthcare technology around the world at the moment. I will come onto this later, but it looks like all of these applications have their own data silos, which are simply a barrier to health systems.

Your.MD

This one looks like the best-funded model around at the moment. They are truly trying to replace a huge proportion of the GP role with self-diagnosis for the patient via their chat interface. They raised in the region of £13m and have been working on this since 2012.

The model these guys are working on is not just for patient diagnosis but also to assist a clinician.

Such is the challenge for this type of application that, on the face of it (and using the Android app as a test), they have done a strong job of providing a diagnosis for simple ailments, but nothing more than a Google search would have given you. When things become a little more serious, the app hands you over to a medical professional, which it does by sending you to a website or two.

Clearly this is a work in progress, and the natural language understanding is spot on. But I am a little disappointed that there is no integration into local healthcare data, with the app launching a website to do the job instead. I know this is difficult, and they are probably getting friction from the large vendors in the sector such as Epic or Cerner, as this type of technology is starting to step on toes.

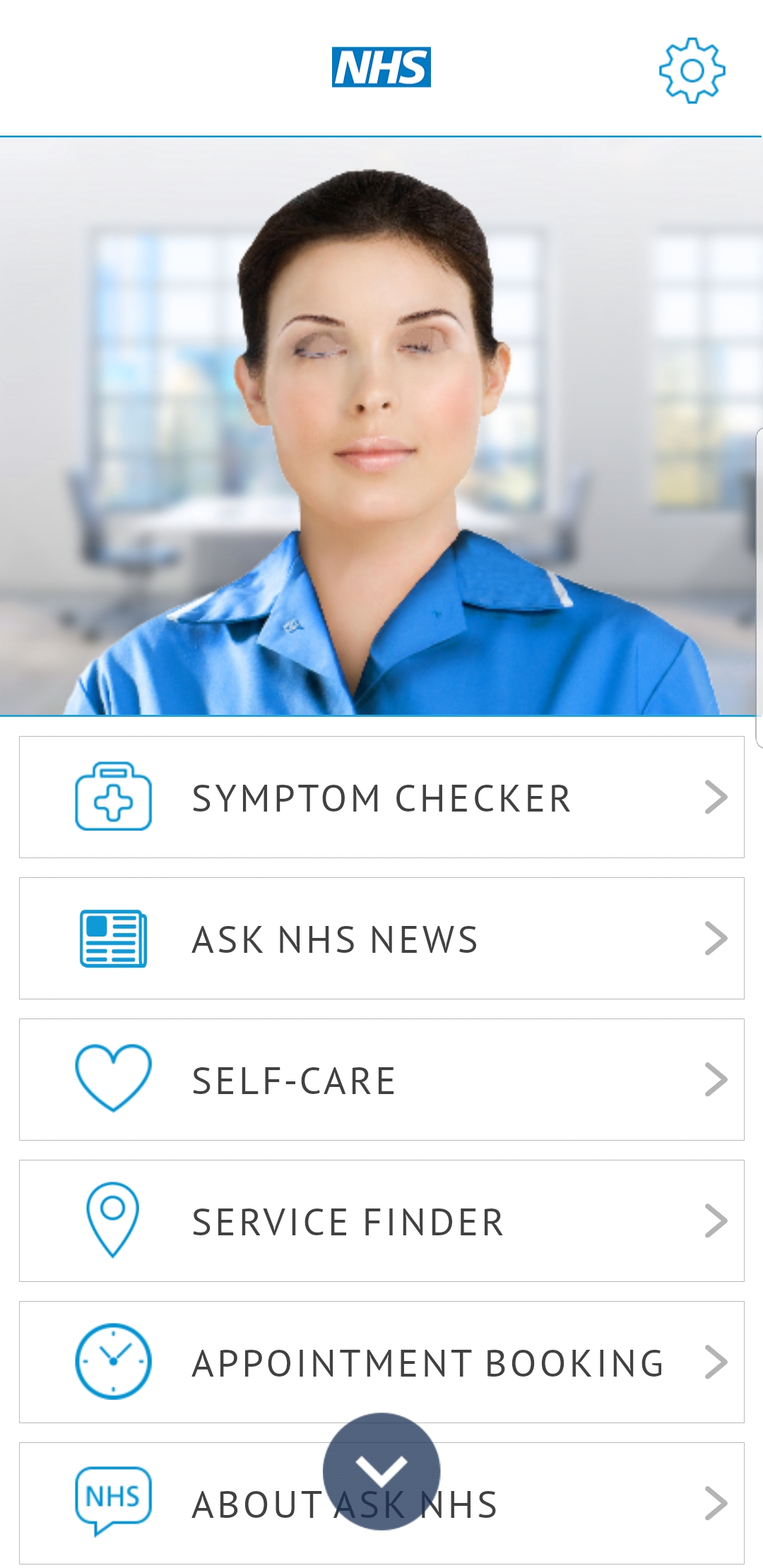

Sensely

Another big player in this market, Sensely have a similar offer to the Web MD model. They have a similarly large investment, in the region of £9m. The difference here is that they are based in San Francisco, and it looks like they originally pushed this as a US offer, with an announcement that they were collaborating with the US healthcare provider Mayo Clinic. However, there is also an NHS offer, aimed solely at the UK market, that is driven by the Sensely logic.

The platform uses algorithms trained on large volumes of clinical content, such as medical protocols and chronic disease information, to interpret patient symptoms and to recommend an appropriate diagnosis.

So a simple Q&A takes you through the symptom checker, but my own anecdotal run-through ended with the app just pushing me to call 111 after a controlled set of questions. I used a simple knee pain example that I have from running, but unfortunately it never got close to asking the right questions.

This is designed around the 111 type service, but does not integrate in any form that I can detect. In fact, there seems to be an attempt to look for local services using GPS location, but either the 111 API does not give them the answer or it is not integrated successfully.

One nice touch is that they have integrated voice into the application for those who do not wish to type. It works about as well as most NLP voice and is good for accessibility.

There is supposed to be a clinician side to this application, but I could not see how that would be achieved as it did not integrate into anything that I could see from the interface.

So still early days for this one, but you can see what they are trying to achieve and this would be powerful once in place. But the issue is that this is labelled as Ask NHS and it simply does not do that as it is another siloed application.

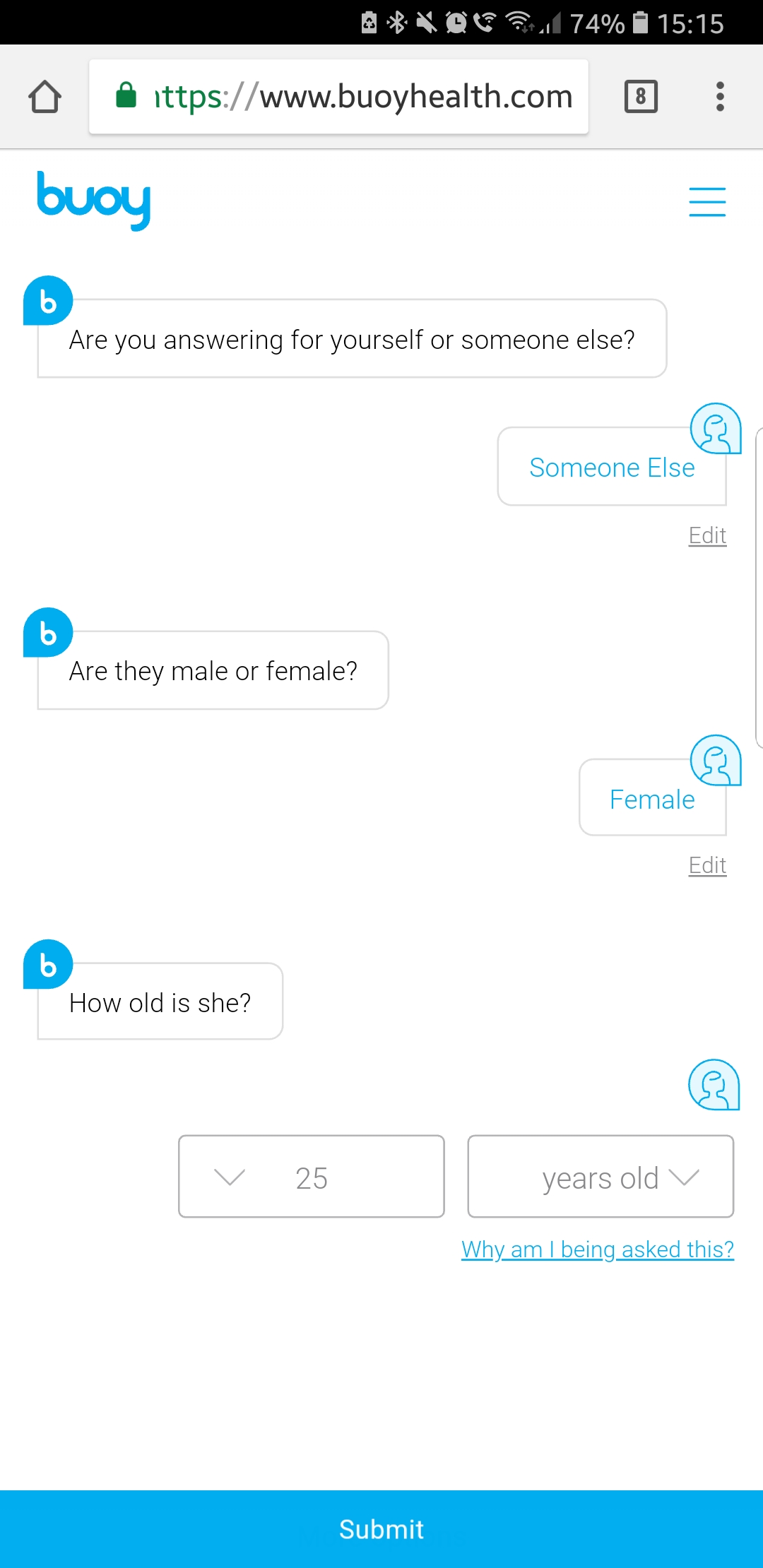

Buoy Health

Another large investment pot for these guys, who raised just under £7m. Based in Boston in the US, their offer is another symptom checker, using an AI component that is trained by a team of doctors and on clinical data from 18,000 medical papers and 5 million patient records.

So the data used here is significant. It is delivered in a different manner from the previous ones, simply through their website, which is of course mobile friendly.

They came back with a strong response to my little knee pain test and, although I am not sure they were right, they came to a diagnosis and pushed me to a doctor or physio.

There is no claim to integrate this with any care service, but it does provide a useful symptom checker service that is easy enough to access if you are online.

With strong post-diagnosis feedback, you can see they are teaching their bot from additional data beyond their original data set: they asked whether I thought the answer was correct or whether I felt it was off the mark.

Florence

This is a slightly different approach to some, with the emphasis on goals, not just symptoms. She did, however, do some symptom checking when asked and came back with some strong suggestions, but let me make the choices rather than giving a real diagnosis.

The stronger areas for Florence are medication reminders and overall health tracking, but of course you need to engage with that over a period to see how successful that would be for a user.

They have been around since 2016 and there are plenty of articles that mention ‘her’ in dispatches. Based in Germany, these guys were beta testing in May 2017 and, according to information from MIT, 2,000 people a day were using her, so there is some traction with this one.

Her creator, David Hawig, mentioned that she would need consistent training to optimise the functionality, and you can see that in action in the style of the responses.

Florence is a health assistant rather than a triage nurse, and that is an important difference in defining where they are taking this technology. The nature of this technology is to be always available, and if that becomes your health coach, then we are in the realms of prevention rather than cure.

I feel the important step for the likes of Florence is integration with IoT data from wearables and the like. She would then become a preventative critical friend that is looking after your wellbeing, maybe.

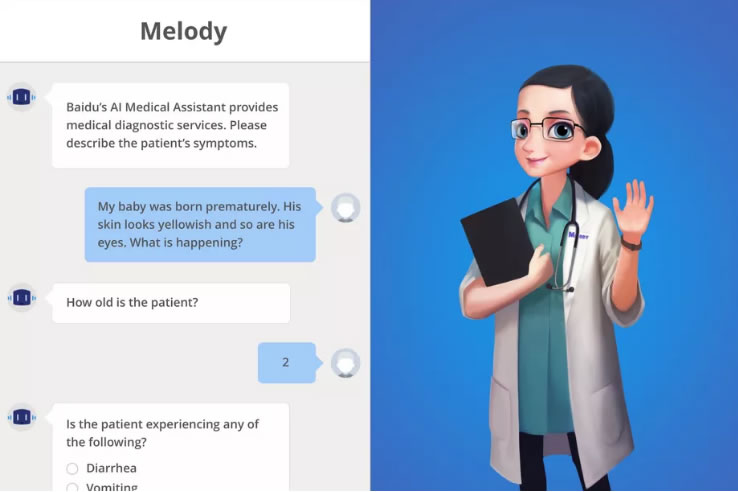

Melody - Baidu Doctor

Baidu have been looking at the health sector for some time and launched Melody in 2015. She allows users to contact local doctors, book appointments and ask questions, so she acts much like the other diagnosis engines that we have seen.

She is only available via an app in China but the technology is aimed at assisting the healthcare industry globally according to Andrew Ng, one of the main stakeholders in the project. Famous for his courses via Stanford University, Dr Ng says that the Baidu bot interacts with millions of Chinese users, giving his technical team a mountain of data to refine and understand the conversation.

This bot is clearly a significant player in this market and of course with the backing of Baidu, they are creating a bot that is equivalent to Google Assistant or Alexa but for healthcare. Powerful stuff.

OK so who is reaching out to who?

The problem comes when you have all these AI projects running, but with only half the story.

In the UK, we have the NHS, our free-at-point-of-use healthcare service, no matter where we are in the UK or, currently, the EU. Now that would suggest that a single citizen would have a single digital record that any hospital in the UK could update. So if you happened to stub your toe in Glasgow or bang your head in London, you would naively expect that your record would reflect this!

This is sadly nowhere close, and in fact it is massively wide of the mark. At a recent openEHR conference held in Plymouth in the UK, they were discussing the task of solving this issue. Tomaz Gornik, the CEO of Marand, one of the openEHR vendors, reported that some UK hospitals can have in the region of 1,200 different specific software solutions. These solutions hold their data independently from the main hospital management system, so they cannot update any central record. On top of this, the tragedy is that a hospital can hold its data separately from its next-door neighbour. Madness in this supposedly central service!

So why is this important? Well, if you have a significant AI that is going to help a patient, exactly what are you going to integrate into to store an outcome, a diagnosis or even the symptoms? It just is not possible.

The US has an even bigger problem, in that each hospital is a business that will look at a patient record as its own IP and its own data real estate.

openEHR is where the way starts to clear for healthcare to own its data. University Hospitals Plymouth NHS Trust are one of the largest single trusts in the UK, and Andy Blofield, Director of IM&T (CIO), is championing the plan to move to this open data standard for all their patient records. He stated at one of the workshops at the recent conference that he has put all their vendors on notice that they will be required to integrate directly and leave data with the hospital trust in an openEHR format.

This means that the trust is never held to ransom for a patient record and the patient has better care across services. Win-win.

So for the commercial and clinician sector, this actually opens up the possibility of disruptive approaches that integrate a specialised service or application and become part of the overall story for a patient. Does this mean the vendor space would change for the NHS?

So the future is bright for these AI doctors, but only if they can talk to your record and give you the best diagnosis with the other half of your story. It is possible; we now just need to get beyond the politics.